Launch GPUs using CLI to run an inference model

This blog will explain how to easily access NVIDIA H100 GPUs using SF Compute and run an inference model using a Cog container. We'll use a ResNet50 model for image classification as an example.

SF Compute gives anyone flexible access to GPUs through its CLI, while Cog lets you package machine-learning models in a production-ready container. This blog post explains how developers can quickly start serving inferences on H100 GPUs.

Let's first set up a node using SF Compute's CLI. This will deploy an 8xH100 instance running as an SF Compute VM.

To get started, sign up at sfcompute.com. Once your account is active, install SF Compute's CLI by following these steps.

curl -fsSL https://sfcompute.com/cli/install | bash

Then, log in to the CLI.

sf login # this will open a browser window

Once authenticated, use this command to automatically generate SSH keys, which will be used later when launching SF Compute instances.

sf ssh --init

SF Compute provides flexible access to compute. You can get access to H100 nodes for a couple of hours or longer time periods. In this blog, we will buy a node for two hours, because our inference model is not going to take that long to produce output.

# Buy 2 hours, starting ASAP, on an H100 with IB (H100i)

sf buy -t h100i -d "2hr"

# Buy 2 hours, starting 1 hour from now

sf buy -t h100i -d "2hr" -s "+1hr"
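Before placing an order, it can help to work out how many GPU-hours it covers. The sketch below does that arithmetic for the order in this post (one 8xH100 node for two hours). The function names and the price per GPU-hour are hypothetical and chosen only for illustration; take the real price from your own order.

```python
def gpu_hours(gpus_per_node: int, nodes: int, hours: float) -> float:
    """Total GPU-hours reserved by an order."""
    return gpus_per_node * nodes * hours


def order_cost(total_gpu_hours: float, price_per_gpu_hour: float) -> float:
    """Cost of an order at a flat price per GPU-hour, rounded to cents."""
    return round(total_gpu_hours * price_per_gpu_hour, 2)


# One 8xH100 node for 2 hours, as in this post.
reserved = gpu_hours(gpus_per_node=8, nodes=1, hours=2)

# HYPOTHETICAL price, for illustration only. It is not an SF Compute quote.
example_price = 2.50

print(f"GPU-hours reserved: {reserved}")
print(f"Cost at the example price: {order_cost(reserved, example_price)}")
```

The point of the calculation is that a node is billed as eight GPUs, so a short two-hour order already amounts to 16 GPU-hours.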

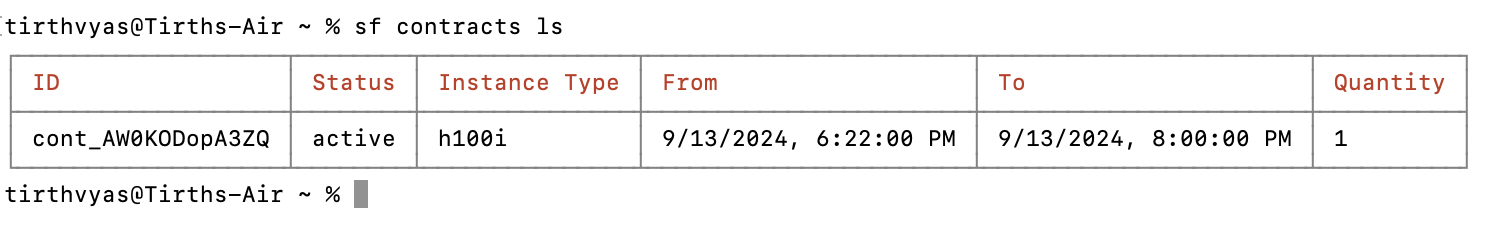

Once you have placed an order, we will work on getting your instance ready for you. If the requested instance quantity is available, we will fill your contract, and you can see the status of your contract using:

sf contracts ls

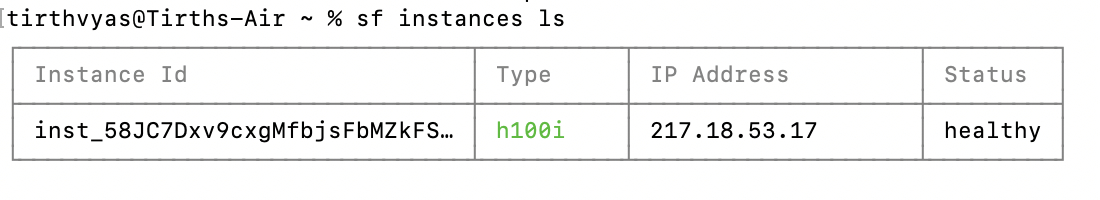

Once the contract is active and the instance order is filled, running the sf instances ls command will list your instance.

Once your instance is up, you can SSH into it with the following command. The default username is "ubuntu".

sf ssh <your instance id>

At this point, you are logged in to your node and ready to put your model to work.

Let's now look into how you can run Cog on your newly created node.

Step 1: Install Cog

First, let's install Cog:

sudo curl -o /usr/local/bin/cog -L \
  "https://github.com/replicate/cog/releases/latest/download/cog_$(uname -s)_$(uname -m)"
sudo chmod +x /usr/local/bin/cog
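The download URL in the install command is assembled from the output of uname -s and uname -m. As a rough illustration of what that expansion produces, here is a small Python sketch. The helper name cog_download_url is made up for this example, and it assumes that platform.system() and platform.machine() report the same values as uname on your node, which is normally the case on Linux.

```python
import platform

COG_RELEASE_BASE = "https://github.com/replicate/cog/releases/latest/download"


def cog_download_url(system: str, machine: str) -> str:
    """Mirror the shell expansion cog_$(uname -s)_$(uname -m)."""
    return f"{COG_RELEASE_BASE}/cog_{system}_{machine}"


# URL for the machine running this script (assumed to match uname output).
print(cog_download_url(platform.system(), platform.machine()))

# URL for a typical x86_64 Linux node.
print(cog_download_url("Linux", "x86_64"))
```

If the printed URL does not correspond to a released binary for your platform, the curl command will save an error page instead of an executable, so this is worth checking when cog fails to start.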

Step 2: Set Up Your Project

Create a new directory for your project:

mkdir cog_inference_project

cd cog_inference_project

Step 3: Create the Cog Configuration File

Create a file named cog.yaml with the following content:

build:
  gpu: true
  system_packages:
    - "python3-pip"
    - "python3-dev"
  python_version: "3.10"
  python_packages:
    - "torch"
    - "torchvision"
    - "Pillow==9.5.0"
    - "requests==2.26.0"
  run:
    - pip install --upgrade pip
predict: "predict.py:Predictor"

This configuration is set up for CUDA 12.2 and Python 3.10, but it's flexible enough to work with other recent versions.

Step 4: Create the Predictor Script

Create a file named predict.py with the following content:

from cog import BasePredictor, Input, Path
import requests
import torch
import torchvision.models as models
import torchvision.transforms as transforms
from PIL import Image


class Predictor(BasePredictor):
    def setup(self):
        # Runs once when the container starts: load the model and the labels.
        self.device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
        print(f"Using device: {self.device}")

        # IMAGENET1K_V1 is the checkpoint that the deprecated pretrained=True loaded.
        weights = models.ResNet50_Weights.IMAGENET1K_V1
        self.model = models.resnet50(weights=weights).to(self.device)
        self.model.eval()

        # Standard ImageNet preprocessing: resize, center crop, normalize.
        self.transform = transforms.Compose([
            transforms.Resize(256),
            transforms.CenterCrop(224),
            transforms.ToTensor(),
            transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
        ])

        # Human-readable names for the 1000 ImageNet classes.
        LABELS_URL = "https://raw.githubusercontent.com/anishathalye/imagenet-simple-labels/master/imagenet-simple-labels.json"
        response = requests.get(LABELS_URL, timeout=30)
        response.raise_for_status()
        self.labels = response.json()

    def predict(self, image: Path = Input(description="Image to classify")) -> str:
        img = Image.open(image).convert("RGB")
        # Add a batch dimension and move the tensor to the model's device.
        img_tensor = self.transform(img).unsqueeze(0).to(self.device)

        with torch.no_grad():
            output = self.model(img_tensor)

        # The index of the largest score is the predicted class.
        _, predicted_idx = torch.max(output, 1)
        predicted_label = self.labels[predicted_idx.item()]
        return f"The image is classified as: {predicted_label}"
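To make the preprocessing in predict.py more concrete, the following sketch works through the geometry of Resize(256) followed by CenterCrop(224) in plain Python, without torchvision. It follows the documented behavior of those transforms (the shorter edge is scaled to 256, then a 224x224 window is taken from the middle). The helper names and the 1200x800 input size are assumptions for illustration, and rounding may differ from torchvision in edge cases.

```python
def resize_shorter_edge(width: int, height: int, size: int) -> tuple[int, int]:
    """Scale so the shorter edge equals `size`, keeping the aspect ratio."""
    short, long = (width, height) if width <= height else (height, width)
    new_long = int(size * long / short)
    return (size, new_long) if width <= height else (new_long, size)


def center_crop_box(width: int, height: int, crop: int) -> tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of a centered crop x crop window."""
    left = int(round((width - crop) / 2.0))
    top = int(round((height - crop) / 2.0))
    return (left, top, left + crop, top + crop)


# Hypothetical 1200x800 landscape image (not the actual size of cat.jpg).
resized = resize_shorter_edge(1200, 800, 256)
box = center_crop_box(resized[0], resized[1], 224)
print(f"after resize: {resized}")
print(f"crop box: {box}")
```

The takeaway is that the model only ever sees the central square of the image, so a subject near the edge of a wide photo may be partly cropped out before classification.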

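Step 5: Download Weights

Download the ResNet50 weights file:

WEIGHTS_URL="https://storage.googleapis.com/tensorflow/keras-applications/resnet/resnet50_weights_tf_dim_ordering_tf_kernels.h5"
curl -O "$WEIGHTS_URL"

Note that this file is in Keras (TensorFlow) format, and predict.py as written does not read it: torchvision fetches its own ResNet50 weights the first time setup() runs, so this download is not required for the steps that follow.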

Step 6: Download a Sample Image

Download a sample image to test your model:

wget "https://upload.wikimedia.org/wikipedia/commons/thumb/3/3a/Cat03.jpg/1200px-Cat03.jpg" -O cat.jpg

Step 7: Run Inference

Now you can run inference on your sample image:

cog predict -i image=@cat.jpg

You should see output indicating the classification of the image. The exact label depends on what the model predicts for the cat, for example, "The image is classified as: tiger cat".
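The predictor reports only the single highest-scoring class. To show how that label relates to the model's raw scores, here is a plain-Python sketch of softmax followed by a top-k ranking. The logits and the four-label list are made up for illustration. Inside predict.py you would more likely use torch.softmax and torch.topk on the output tensor rather than these helpers.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits: list[float], labels: list[str], k: int = 3) -> list[tuple[str, float]]:
    """Return the k most probable (label, probability) pairs, best first."""
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(labels[i], probs[i]) for i in ranked[:k]]


# Made-up scores and labels; a real ResNet50 produces 1000 logits.
toy_labels = ["tabby cat", "tiger cat", "Egyptian cat", "lynx"]
toy_logits = [2.0, 3.0, 1.0, 0.0]

for label, prob in top_k(toy_logits, toy_labels):
    print(f"{label}: {prob:.3f}")
```

Returning a few ranked candidates with probabilities makes it easier to judge how confident the model is, which matters for visually similar classes such as different cat breeds.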

Troubleshooting

If you encounter any issues:

- Ensure Docker is running:

sudo systemctl status docker

- You may have to upgrade your Docker version if you see errors when running the cog predict command.

- Check CUDA availability:

nvidia-smi

- Verify Python version:

python3 --version

Conclusion

You've now set up and run an inference model with Cog on a node with 8 NVIDIA H100 GPUs, using just the CLI! This setup provides a flexible and reproducible environment for your machine learning projects. You can modify the predict.py script to use different models or adjust the preprocessing steps as needed for your specific use case.